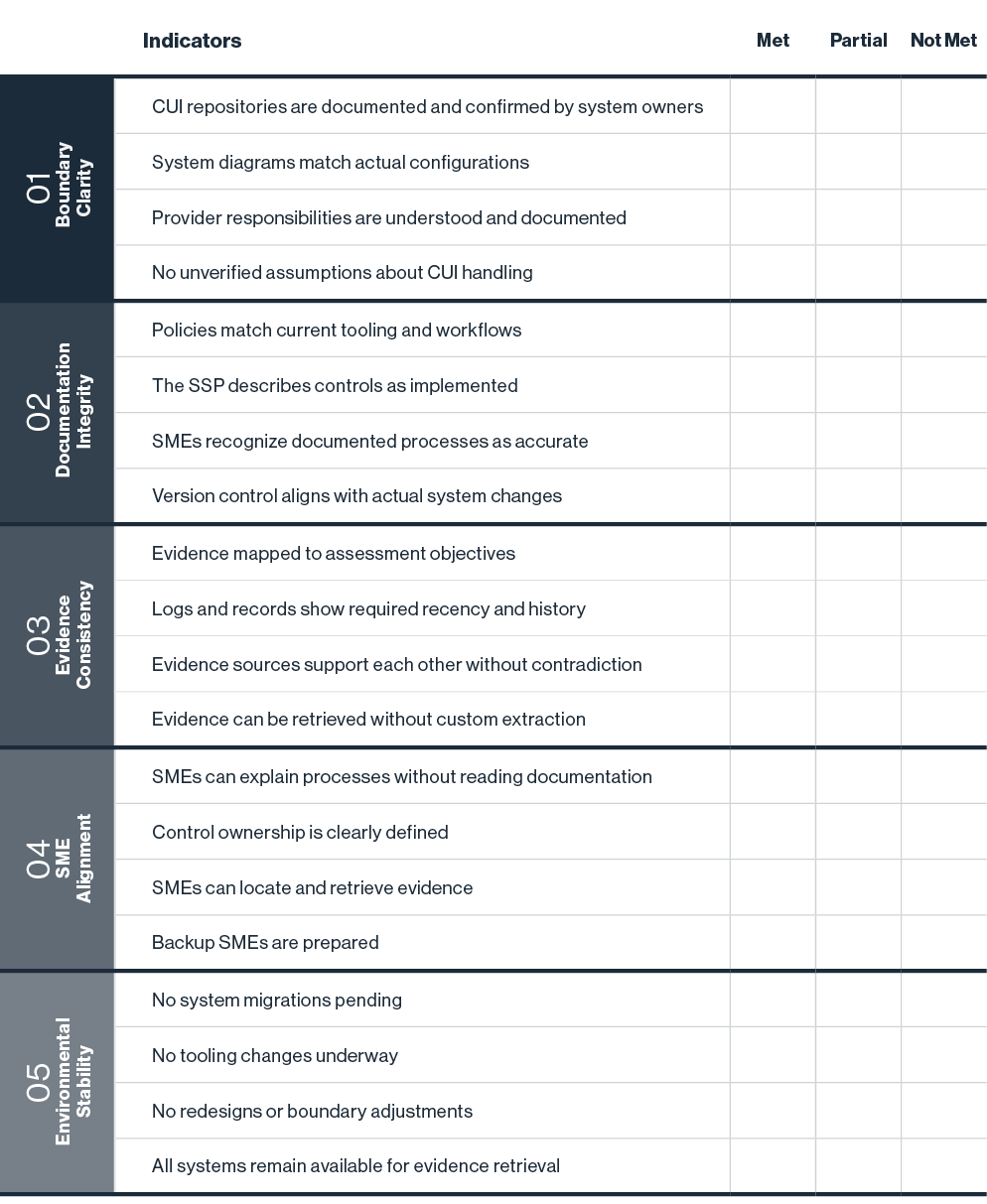

“We have seen several OSCs (Organizations Seeking Certification) that either didn’t have enough of the right documentation, evidence, or scope provided and defined during our readiness review. This has caused rescheduling activities that have pushed assessments out for several months.”